Function Calling with Spring AI

A simple Spring application using OpenAI's function calling feature

08/08/2024

Introduction

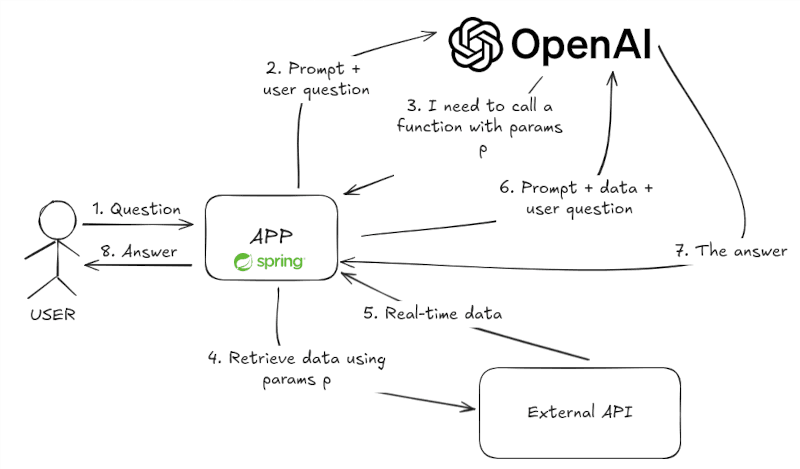

According to OpenAI, function calling is the ability to connect large language models (LLMs) to external functionality such as real-time services and databases, so the AI assistant can "call" functions or tools in order to provide answers based on external data. Since the LLM is not actually capable of calling functions, the idea is to get output from the LLM in JSON format, so your application can select and invoke the right function. This function will either provide data to be fed back to the LLM or just perform an external action.
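To make the "select and invoke" step concrete, here is a minimal plain-Java sketch of it. It does not use Spring AI or the OpenAI client. The ToolCall record, the TOOLS registry, and the hard-coded JSON result are all assumptions made for illustration, standing in for the model's parsed JSON output and for a real quotation lookup.

```java
import java.util.Map;
import java.util.function.Function;

// Plain-Java sketch of the dispatch step; no Spring AI or OpenAI classes involved.
class ToolDispatchSketch {

    // Hypothetical shape of a tool call after the model's JSON output has been parsed.
    record ToolCall(String name, Map<String, String> arguments) {}

    // Registry of the functions the application is willing to run, keyed by name.
    // The returned JSON is mock data, not a real quotation.
    static final Map<String, Function<Map<String, String>, String>> TOOLS = Map.of(
        "CurrentQuotation",
        args -> "{\"symbol\":\"" + args.get("cryptoSymbol") + "\",\"priceUSD\":25000.0}"
    );

    // Selects the requested function and returns its result,
    // which the application would then send back to the model.
    static String dispatch(ToolCall call) {
        Function<Map<String, String>, String> tool = TOOLS.get(call.name());
        if (tool == null) {
            throw new IllegalArgumentException("Unknown function: " + call.name());
        }
        return tool.apply(call.arguments());
    }

    public static void main(String[] args) {
        ToolCall call = new ToolCall("CurrentQuotation", Map.of("cryptoSymbol", "BTC"));
        System.out.println(dispatch(call));
    }
}
```

The model only ever produces the name and the arguments. Whether a function runs at all, and which one, remains the application's decision.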

In this short tutorial, we will create a Spring application that answers the user's questions about the cryptocurrency market using a chat model from OpenAI and an external API for retrieving quotation data. The model will decide whether or not to call the external function based on the user's prompt and provide basic assessments about the market. The diagram below illustrates how this process works:

The project

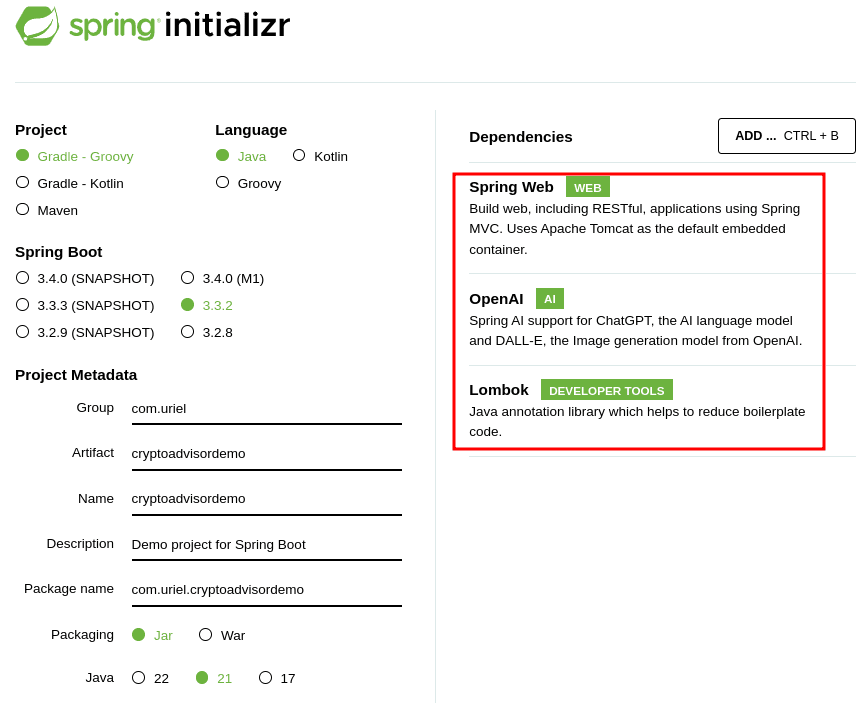

Let's get started by creating a new Spring project with Spring Initializr. For this demo application I will be using Spring Web, OpenAI, and Lombok.

Then, let's set up a few properties in our application.properties file:

spring.application.name=cryptoadvisordemo

# OpenAI API parameters

spring.ai.openai.base-url=https://api.openai.com

spring.ai.openai.api-key=${OPENAI_API_KEY}

# Application specific properties

application.chat.model=gpt-3.5-turbo

application.chat.temperature=0.1f

application.chat.max-tokens=200

The OpenAI API key will be provided via the environment variable OPENAI_API_KEY. Let's create a configuration properties class for the chat parameters:

@Configuration

@ConfigurationProperties(prefix = "application.chat")

@Setter

@Getter

public class ChatProperties {

private String model;

private Float temperature;

private Integer maxTokens;

}

Don't forget to enable the configuration properties in the main application file:

@SpringBootApplication

@EnableConfigurationProperties(ChatProperties.class)

public class CryptoadvisordemoApplication {

public static void main(String[] args) {

SpringApplication.run(CryptoadvisordemoApplication.class, args);

}

}

A simple overview endpoint

It's always good to start simple. Let's create an endpoint that returns a basic overview of the specified cryptocurrency based on a fake quotation:

@RestController

@RequiredArgsConstructor

public class AdviceController {

private final AdviceService adviceService;

@GetMapping("/advice/overview/{cryptoSymbol}")

public ResponseEntity<String> generate(

@PathVariable CryptoSymbol cryptoSymbol

) {

return ResponseEntity.ok(adviceService.overview(cryptoSymbol));

}

}

The path variable is of the CryptoSymbol type, which is an enum listing the cryptocurrencies supported by our application:

@Getter

@RequiredArgsConstructor

public enum CryptoSymbol {

BTC("Bitcoin"), ETH("Ethereum"), SOL("Solana");

private final String title;

}

The core logic of our service will be implemented in AdviceService, where we inject an instance of OpenAiChatModel and send a prompt to the GPT model. There we create a simple user message asking for a short overview of the specified crypto based on its current quotation. When building the OpenAiChatOptions object that is passed to the Prompt constructor, we specify the model, the temperature, and the quotation function. By doing so, we inform the model that the "CurrentQuotation" function is available for it to call if necessary (in this case, it always will be).

@Service

@RequiredArgsConstructor

public class AdviceService {

private final OpenAiChatModel chatModel;

public String overview(CryptoSymbol cryptoSymbol) {

UserMessage userMessage = new UserMessage(

"""

Give me a short overview of the current %s (%s) quotation followed by a brief trend assessment.

""".formatted(cryptoSymbol.getTitle(), cryptoSymbol.name())

);

var chatResponse = chatModel.call(

new Prompt(

List.of(userMessage),

OpenAiChatOptions.builder()

.withModel("gpt-3.5-turbo")

.withTemperature(0f)

.withFunction("CurrentQuotation")

.build()

)

);

return chatResponse.getResult().getOutput().getContent();

}

}

Now we need to implement the CurrentQuotation function and make it available in the application container. Let's create a class QuotationFunctionMock that implements the Function interface and returns a Quotation object with mock data:

public class QuotationFunctionMock implements Function<QuotationFunctionMock.Request, Quotation> {

public record Request(String cryptoSymbol) {}

@Override

public Quotation apply(Request r) {

return new Quotation(

r.cryptoSymbol(), "", 25000, 26000, 1317774231.5192668

);

}

}

The Quotation class is a simple record with price and volume information:

public record Quotation(

String symbol,

String name,

double priceUSD,

double priceYesterdayUSD,

double volumeYesterdayUSD

) {}

Finally, we create a configuration class that provides a bean of the type FunctionCallback. This object will wrap an instance of our QuotationFunctionMock class and hold information about the function (name and description). The name attribute should match the one we used in the AdviceService ("CurrentQuotation") and the description should tell the chat model what the function does and how to call it.

@Configuration

public class BeanProvider {

@Bean

public FunctionCallback quotationFunction() {

return FunctionCallbackWrapper.builder(new QuotationFunctionMock())

.withName("CurrentQuotation")

.withDescription("""

Get the current quotation of the cryptocurrency by its symbol.

Available symbols are: %s

""".formatted(

Stream.of(CryptoSymbol.values()).map(CryptoSymbol::name)

.collect(Collectors.joining(", "))

))

.build();

}

}

Now we have everything set up to try out our overview endpoint. Remember to set the environment variable OPENAI_API_KEY to your OpenAI key. By calling the endpoint http://localhost:8080/advice/overview/BTC (8080 being Spring Boot's default port) using curl, some HTTP client, or even your browser, you should get an (outdated) overview of Bitcoin based on the mock information our function provided:

The current quotation for Bitcoin (BTC) is $25,000. The price yesterday was $26,000. The trading volume yesterday was $1,317,774,231.52.

Based on this information, there seems to be a slight decrease in the price of Bitcoin compared to yesterday. The trend assessment indicates a downward movement in the price.

Answering a question using real-time data

Now it's time to make our application a bit more interesting. Let's create a new service allowing the user to ask a question about the cryptomarket to the chat model. First, create a record called Question containing the single string field text. Then, create the following method in the AdviceController class:

@RestController

@RequiredArgsConstructor

public class AdviceController {

...

@PostMapping("/advice/question")

public ResponseEntity<String> generate(

@RequestBody Question question

) {

return ResponseEntity.ok(adviceService.answer(question.text()));

}

}

Then we implement a method called answer in the AdviceService that works similarly to the overview method, but passes two messages to the model instead:

(i) A system message with instructions on how to properly answer the user about the cryptomarket using the available tools (our quotation function, in this case). The prompt is designed so that the model will (hopefully) only answer questions related to cryptocurrencies.

(ii) A user message containing the question from the client input.

@Service

@RequiredArgsConstructor

public class AdviceService {

private final OpenAiChatModel chatModel;

private final ChatProperties chatProperties;

...

public String answer(String text) {

SystemMessage systemMessage = new SystemMessage("""

You are a helpful AI assistant that provides insight into cryptocurrencies based

on realtime quotations. Answer the user question based on your knowledge about the

market and the available tools for retrieving quotation info as needed. If the user's

question has nothing to do with cryptocurrencies or the associated markets,

just say you don't know the answer.

\s""");

UserMessage userMessage = new UserMessage(text);

return getChatResponse(List.of(systemMessage, userMessage))

.getResult().getOutput().getContent();

}

private ChatResponse getChatResponse(List<Message> messages) {

return chatModel.call(

new Prompt(

messages,

OpenAiChatOptions.builder()

.withModel(chatProperties.getModel())

.withTemperature(chatProperties.getTemperature())

.withMaxTokens(chatProperties.getMaxTokens())

.withFunction("CurrentQuotation")

.build()

)

);

}

}

Connecting to an external API

At this point, everything should work fine using our fake data. In order to start working with real-time information, we need to wire our application up with some external API. For the sake of simplicity, we will use the RestTemplate class for that purpose, which is already included in the Spring Web Framework. You should opt for WebClient in your applications instead, since it's a more modern, non-blocking, and reactive solution.
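To give a rough idea of what "non-blocking" means here without adding any dependency, the sketch below uses the JDK's built-in java.net.http.HttpClient as a stand-in. It is not WebClient and not part of this project. The base URL is a placeholder and the class and method names are made up for illustration.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.concurrent.CompletableFuture;

// Sketch of a non-blocking call using the JDK's HttpClient as a stand-in for WebClient.
class NonBlockingFetchSketch {

    // Builds a GET request for <baseUrl>/<symbol>.
    static HttpRequest buildRequest(String baseUrl, String symbol) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/" + symbol))
            .header("Accept", "application/json")
            .GET()
            .build();
    }

    // Sends the request without blocking the calling thread;
    // the response body is delivered through the returned future.
    static CompletableFuture<String> fetchAsync(HttpClient client, HttpRequest request) {
        return client.sendAsync(request, HttpResponse.BodyHandlers.ofString())
            .thenApply(HttpResponse::body);
    }

    public static void main(String[] args) {
        // Placeholder base URL (an assumption); replace it with the real quotation endpoint.
        HttpRequest request = buildRequest("https://example.com/quotation", "BTC");
        System.out.println(request.method() + " " + request.uri());
        // To actually send it:
        // fetchAsync(HttpClient.newHttpClient(), request).thenAccept(System.out::println).join();
    }
}
```

With RestTemplate, the calling thread waits for the response. With a future-based or reactive client, it is free to do other work until the body arrives.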

For this demo application, I chose to use the DIA Data API, which provides simple crypto information for free and does not require the use of an authorization key (up to this date). Let's create a new service that will be responsible for retrieving the latest quotation info for a given cryptocurrency.

@Service

@RequiredArgsConstructor

public class QuotationService {

private final RestTemplate restTemplate;

private final QuotationProperties props;

public Quotation fetch(CryptoSymbol cryptoSymbol) {

Map<String, Object> responseMap = restTemplate.getForObject(

props.getApiUrl() + "/" + cryptoSymbol.name(), Map.class

);

if (responseMap == null) throw new RuntimeException("Could not fetch quotation");

return new Quotation(

(String) responseMap.get("Symbol"),

(String) responseMap.get("Name"),

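// JSON numbers without a fractional part may be deserialized as Integer, so convert via Number

((Number) responseMap.get("Price")).doubleValue(),

((Number) responseMap.get("PriceYesterday")).doubleValue(),

((Number) responseMap.get("VolumeYesterdayUSD")).doubleValue()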

);

}

}

The QuotationProperties configuration class and the associated properties can be found in the project repository. Now we must create a new quotation function class that uses this service instead of just returning an object with mock data:

@RequiredArgsConstructor

public class QuotationFunction implements Function<QuotationFunction.Request, Quotation> {

private final QuotationService quotationService;

public record Request(String cryptoSymbol) {}

@Override

public Quotation apply(Request r) {

return quotationService.fetch(CryptoSymbol.valueOf(r.cryptoSymbol()));

}

}

Then we must modify the BeanProvider configuration class to inject the QuotationService when creating the QuotationFunction object and also to build and provide the RestTemplate object:

@Configuration

public class BeanProvider {

@Bean

public FunctionCallback quotationFunction(@Autowired QuotationService quotationService) {

return FunctionCallbackWrapper.builder(new QuotationFunction(quotationService))

.withName("CurrentQuotation")

.withDescription("""

Get the current quotation of the cryptocurrency by its symbol.

Available symbols are: %s

""".formatted(

Stream.of(CryptoSymbol.values()).map(CryptoSymbol::name)

.collect(Collectors.joining(", "))

))

.build();

}

@Bean

public RestTemplate restTemplate(RestTemplateBuilder builder) {

return builder.build();

}

}

Running and testing the new service

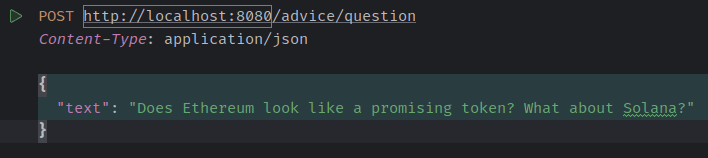

Let's run the application and perform a post request to our new endpoint:

By sending the question above, you will most likely get a response based on two external API calls (one for each cryptocurrency mentioned). This is what I got on 29/07/2024:

As of the latest data:

- Ethereum (ETH) is currently priced at $3,372.99 USD. Yesterday, its price was $3,265.43 USD, and the trading volume was $2,634,234,950.99 USD.

- Solana (SOL) is currently priced at $192.13 USD. Yesterday, its price was $185.88 USD, and the trading volume was $581,814,086.53 USD.

Based on the current prices and trading volumes, both Ethereum and Solana show positive movements. Ethereum is a well-established token with a strong market presence, while Solana has been gaining popularity for its fast transactions and scalability. Both tokens have shown promising performance in the market.

Notice that the answer is based both on the data retrieved from the external API and on the model's own knowledge about Ethereum and Solana.
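In this application the chat model integration handles the round trip for us, so the code below is only a conceptual sketch of what happens when one question leads to two function calls. The Message record, the "tool" role label, the sample question, and the mock quotation strings are assumptions for illustration, not Spring AI types or real data.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Conceptual sketch: one user question, two function calls, one follow-up conversation.
class ToolRoundTripSketch {

    // Hypothetical, simplified chat message.
    record Message(String role, String content) {}

    // Runs the quotation function once per requested symbol and appends each
    // result to a copy of the conversation as a "tool" message.
    static List<Message> withToolResults(
            List<Message> conversation,
            List<String> requestedSymbols,
            Function<String, String> quotationFunction) {
        List<Message> next = new ArrayList<>(conversation);
        for (String symbol : requestedSymbols) {
            next.add(new Message("tool", quotationFunction.apply(symbol)));
        }
        return next;
    }

    public static void main(String[] args) {
        // Made-up question and mock quotation function.
        List<Message> conversation = List.of(new Message("user", "How are ETH and SOL doing?"));
        Function<String, String> mockQuotation = symbol -> symbol + " priceUSD=100.0";

        List<Message> followUp = withToolResults(conversation, List.of("ETH", "SOL"), mockQuotation);
        followUp.forEach(m -> System.out.println(m.role() + ": " + m.content()));
    }
}
```

The follow-up conversation, now containing both quotations, is what the model sees when it writes the final answer, which is how it can mix fresh data with what it already knows about each token.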

Conclusion

In this short tutorial we created a Spring API for getting advice about cryptocurrencies using a chat model and up-to-date quotation data. Despite its simplicity, the application demonstrates an interesting interplay between the foundational knowledge of a large language model and external information. By integrating the model with more complex data, such as price history and moving averages, it should be possible to design powerful applications that provide insight into the market in real time.
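As one example of such richer data, a simple moving average could be computed in the application and exposed to the model as another function. The sketch below is not part of the project. The class name, method name, and sample prices are made up for illustration.

```java
import java.util.List;

// Sketch of a simple moving average that could back an additional function.
class MovingAverageSketch {

    // Average of the last `window` prices in the list (oldest first).
    static double simpleMovingAverage(List<Double> prices, int window) {
        if (window <= 0 || window > prices.size()) {
            throw new IllegalArgumentException("window must be between 1 and " + prices.size());
        }
        double sum = 0;
        for (double price : prices.subList(prices.size() - window, prices.size())) {
            sum += price;
        }
        return sum / window;
    }

    public static void main(String[] args) {
        // Made-up closing prices, oldest first.
        List<Double> prices = List.of(100.0, 102.0, 104.0, 106.0);
        System.out.println(simpleMovingAverage(prices, 2)); // prints 105.0
    }
}
```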

Resources

- Project source code on GitHub